(UroToday.com) The 2023 ASTRO annual meeting included a session exploring the ethical and legal implications of artificial intelligence in medical practice, featuring a presentation by Dr. Skyler Johnson on the ethical considerations of artificial intelligence chatbots: ChatGPT and beyond.

Dr. Johnson started by emphasizing the importance of exploring the ethical implications of artificial intelligence chatbots like ChatGPT for physician users in radiation oncology and for patients with cancer, and of critically examining the potential benefits and challenges these chatbots pose in the medical field. Acknowledging that the use of ChatGPT could by itself fill an extensive series of presentations, Dr. Johnson began with an overview of the three steps used to train ChatGPT:

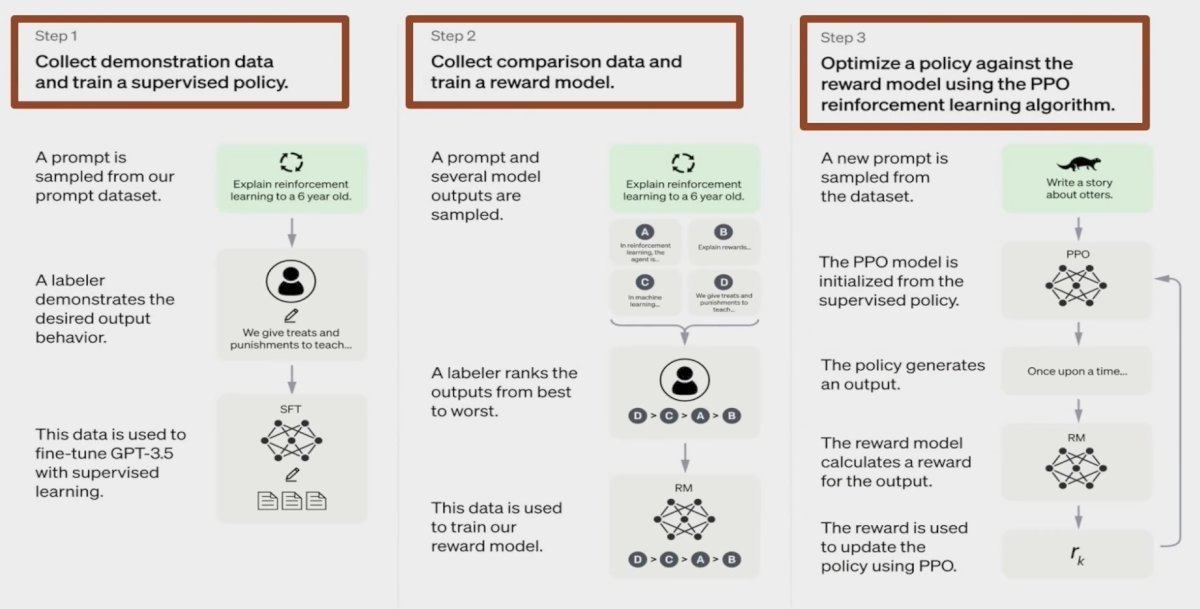

- Collect demonstration data and train a supervised policy

- Collect comparison data and train a reward model

- Optimize a policy against the reward model using the proximal policy optimization (PPO) reinforcement learning algorithm
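These three steps correspond to reinforcement learning from human feedback (RLHF), the training recipe OpenAI described for InstructGPT and applied to ChatGPT. As a rough illustration only, the minimal Python sketch below computes the quantity optimized at each step for a single example, using plain floats in place of real model outputs. The function names, the clipping value `eps=0.2` and the penalty weight `beta=0.02` are illustrative assumptions, not OpenAI's actual code or settings.

```python
import math


def sft_loss(token_logprobs: list[float]) -> float:
    """Step 1 (supervised policy): mean negative log-likelihood of the tokens
    in a human-written demonstration under the model being fine-tuned."""
    return -sum(token_logprobs) / len(token_logprobs)


def reward_model_loss(r_chosen: float, r_rejected: float) -> float:
    """Step 2 (reward model): pairwise loss on one human comparison, where
    r_chosen/r_rejected are the reward model's scores for the preferred and
    the lower-ranked response. Equals -log(sigmoid(r_chosen - r_rejected)),
    written in a numerically stable form."""
    d = r_chosen - r_rejected
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))


def shaped_reward(rm_score: float, logp_policy: float, logp_sft: float,
                  beta: float = 0.02) -> float:
    """Step 3 (reward signal): reward-model score minus a KL-style penalty
    that discourages the policy from drifting far from the supervised model."""
    return rm_score - beta * (logp_policy - logp_sft)


def ppo_clipped_objective(logp_new: float, logp_old: float,
                          advantage: float, eps: float = 0.2) -> float:
    """Step 3 (policy update): PPO's clipped surrogate objective (to be
    maximized). Clipping the probability ratio to [1 - eps, 1 + eps] limits
    how far a single update can move the policy."""
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped_ratio * advantage)
```

In this sketch the reward model's loss shrinks as it scores the human-preferred answer further above the rejected one, and the PPO objective stops rewarding updates once the new policy's probability ratio leaves the clipping window. That clipping is the design choice that keeps each reinforcement learning step conservative.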

In general, ChatGPT is an advanced artificial intelligence chatbot powered by natural language processing; it is designed to generate human-like responses to user queries, emulating natural conversation. ChatGPT has several key capabilities:

- Natural language processing: ChatGPT can understand and respond to human language with contextual understanding

- Large language model: It is based on a massive language model trained on vast amounts of text data

- Human-like responses: ChatGPT generates responses that mimic human communication

ChatGPT finds applications in a wide range of fields, including healthcare, education, and more. Additionally, it can assist with tasks such as generating text, summarizing content, and providing answers to questions. ChatGPT has the potential to fundamentally change the way humans interact with technology, enabling more natural and conversational interactions with artificial intelligence systems. Importantly, there are several limitations to ChatGPT:

- Accuracy and reliability: ChatGPT’s responses may not always be accurate or reliable, especially in technical or critical contexts. The accuracy and trustworthiness of artificial intelligence are crucial for widespread adoption

- Factors affecting responses: The accuracy of ChatGPT’s responses may depend on its training material and on how well it understands the user’s question. The program does not always ask clarifying questions, which makes it prone to acting on assumptions

- Deceptive responses: ChatGPT can generate responses that convincingly resemble accurate information, even when that information is false. Medical professionals and users must exercise caution when relying on ChatGPT’s responses

- Need for accuracy review: Caution is advised, and ChatGPT should be used in tasks that allow for accuracy review. Continuous scrutiny of references and citations provided by ChatGPT is essential

- Ethical considerations: Ethical concerns arise when relying on artificial intelligence chatbots for information that can impact decisions, especially in healthcare. The potential for misinformation underscores the need for responsible usage

Dr. Johnson notes that there are several considerations for ChatGPT in radiation oncology:

- Risks for medical professionals: Radiation oncologists often deal with highly technical queries and rely on accurate information for treatment decisions, so ChatGPT’s deceptive behavior poses serious risks to medical professionals in this field

- Challenges faced: ChatGPT can provide technically incorrect information, with explanations that appear scientifically sound. The possibility of incorrect radiation therapy decisions due to misleading information is a substantial concern. For example, ChatGPT might generate false technical details related to radiation therapy protocols or equipment, and these details, if not identified, can lead to treatment errors or suboptimal patient care

- Implications: Deceptive responses by ChatGPT in radiation oncology highlight the need for stringent accuracy and reliability measures. The reliability of artificial intelligence tools in critical medical contexts must be carefully evaluated before integration

With regard to prudent usage of ChatGPT, there are several important considerations:

- Recommended guidelines for use: Consider employing ChatGPT in tasks that allow for a review of accuracy and reliability, and always exercise caution when using ChatGPT for critical medical decisions

- Continuous scrutiny: The credibility of information generated by ChatGPT, including citations and references, should be continuously scrutinized. Rigorous quality assurance processes are vital in radiation oncology. ChatGPT may be useful for administrative tasks, such as drafting documentation, but with verification. We may consider using ChatGPT as a tool for streamlining non-critical aspects of radiation oncology practice

- Future developments: As natural language processing models evolve, they may become more reliable in clinical applications, including radiation oncology. However, until conclusive evidence of reliability exists, we should err on the side of caution

- Avoiding misuse: We must ensure that all outputs generated by ChatGPT are subject to meticulous scrutiny before dissemination to patients or others. We must prevent the use of ChatGPT for tasks that require complete accuracy and precision in radiation oncology

Ebrahimi et al. asked, “Would it be wise to consider the use of a technology that may not consistently convey factual information?”1 In the absence of conclusive evidence that quality assurance processes for such tools are dependable, it is crucial that all outputs generated by ChatGPT be subjected to meticulous scrutiny before dissemination to patients or others. We should also exercise caution and avoid using these tools for generating scientific work or for clinical decision-making. Recent work from Dr. Johnson’s group assessed the use of ChatGPT to evaluate cancer myths and misconceptions.2 In this study, the investigators used the NCI webpage ‘Common Cancer Myths and Misconceptions’; the outputs generated by ChatGPT were blinded alongside the NCI’s answers and subsequently reviewed. ChatGPT’s answers were 97% accurate, but reviewers agreed that some of them were vague and unclear, raising concern that patients could interpret these answers incorrectly. Overall, this study provides valuable and well-timed insights into the capabilities and limitations of ChatGPT in the context of cancer-related information.

Importantly, ChatGPT may be used by radiation oncologists for many administrative tasks:

- Streamlining workflows: ChatGPT has the capacity to streamline tedious administrative tasks, reducing the administrative burden on radiation oncologists. For example, ChatGPT can assist in composing letters, drafting documentation, and generating clinical notes. It can quickly handle tasks like proofreading, grammatical correction, and structural formatting

- Customizable and responsive: ChatGPT is highly responsive to direction, allowing it to adapt its approach as requested. This adaptability can be beneficial in tailoring administrative tasks to specific needs

- Compliance and data deidentification: Recent studies suggest that ChatGPT can help deidentify patient data in a manner compliant with HIPAA requirements, although it should be used on local networks to ensure data privacy

Furthermore, there are important considerations for privacy and compliance in radiation oncology:

- Local network usage: To ensure patient data privacy, ChatGPT should be used on a local network rather than on external platforms

- HIPAA compliance: ChatGPT can assist in deidentifying patient data in compliance with the Health Insurance Portability and Accountability Act (HIPAA). We should ensure that it is used in a manner consistent with HIPAA regulations

- Data security: Implement robust data security measures when integrating ChatGPT into radiation oncology practice, and protect patient information from unauthorized access

- Ongoing compliance monitoring: We must continuously monitor and update compliance measures to adapt to changing regulations. Additionally, we must regularly review and assess the security of ChatGPT’s usage in the radiation oncology setting
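One practical way to act on the deidentification and local-use recommendations is to scrub obvious identifiers from clinical text before it ever reaches a chatbot. The hypothetical Python sketch below swaps in a simple rule-based technique (regular expressions), not the ChatGPT-based deidentification described in the talk. The `PATTERNS` list and the `scrub` function are illustrative assumptions that cover only a few identifier types (email addresses, US-style phone numbers, numeric dates, and "MRN"-labeled record numbers); the HIPAA Safe Harbor method lists 18 identifier types, and this sketch does not handle most of them, names included.

```python
import re

# Hypothetical, deliberately incomplete patterns: each maps an identifier
# type to a regex, and matches are replaced with a bracketed placeholder tag.
PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
    ("DATE", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
    ("MRN", re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE)),
]


def scrub(text: str) -> str:
    """Replace each pattern match with a placeholder such as [DATE]."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `scrub("Pt seen 10/02/2023, MRN: 123456, call 801-555-0100")` yields `"Pt seen [DATE], [MRN], call [PHONE]"`. Pattern-based scrubbing like this misses free-text identifiers, so its output would still need the human review the talk recommends.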

ChatGPT is also likely to be relevant in education and training:

- Personalized learning: ChatGPT can provide personalized education by adapting teaching materials to specific teaching styles and learners’ unique needs

- Tailored content: ChatGPT can customize learning materials based on the audience’s level of expertise, whether they are undergraduates, graduates, or specialists

- Generating educational content: ChatGPT can generate quiz questions to test learners’ understanding of radiation oncology topics, and it can assist in creating educational content and materials for a variety of purposes

- Interactive learning: Unlike traditional methods, ChatGPT engages learners in a dialogue, promoting critical thinking and active learning. Additionally, it can answer specific inquiries, explain concepts, and offer real-time feedback on written work

ChatGPT should be used to complement human instructors and not replace them:

- The value of human instructors: Human instructors offer unique benefits, including mentorship, personalized guidance, and nuanced teaching. Additionally, they provide essential elements of education and cannot be replaced by artificial intelligence

- ChatGPT’s role: It fills gaps in education by providing quick responses, customized content, and real-time feedback. It can support instructors in managing educational tasks efficiently

- Prompt and customized responses: ChatGPT offers prompt responses to student inquiries, facilitating faster learning. It can adapt teaching materials to specific teaching styles and learners’ needs

- Balancing technology and human expertise: The combination of artificial intelligence tools like ChatGPT and human instructors creates a balanced and effective educational environment that can harness the strengths of both technology and human expertise. Collaboration between ChatGPT and human instructors ensures that students receive a well-rounded and effective education in radiation oncology

Dr. Johnson notes that there are several important future research directions related to ChatGPT:

- Clinical decision support: enhancing treatment planning and quality assurance

- Academic and educational support: artificial intelligence-based educational tools, adaptive learning, and artificial intelligence-enhanced curriculum development

- Administrative efficiency: automated documentation, natural language interfaces, and data analytics and reporting

- Ethical and regulatory considerations: ethical guidelines for ChatGPT use, regulatory compliance, and safety audits

- Patient education and communication: interactive patient education, patient-provider communication, and health literacy enhancement

Dr. Johnson concluded his presentation on ethical considerations of artificial intelligence chatbots such as ChatGPT and beyond with the following take-home messages:

- ChatGPT offers valuable contributions to radiation oncology education and administrative tasks

- Ethical considerations and limitations must guide its responsible use in critical medical contexts

- Future developments may enhance reliability, but rigorous evaluation is essential

Presented by: Skyler Johnson, MD, University of Utah Huntsman Cancer Institute, Salt Lake City, UT

Written by: Zachary Klaassen, MD, MSc – Urologic Oncologist, Associate Professor of Urology, Georgia Cancer Center, Wellstar MCG Health, @zklaassen_md on Twitter during the 2023 American Society for Radiation Oncology (ASTRO) Annual Meeting, San Diego, CA, Sun, Oct 1 – Wed, Oct 4, 2023.

References:

- Ebrahimi B, Howard A, Carlson DJ, et al. ChatGPT: Can a Natural Language Processing Tool Be Trusted for Radiation Oncology Use? Int J Radiat Oncol Biol Phys. 2023 Aug 1;116(5):977-983.

- Johnson SB, King AJ, Warner EL, et al. Using ChatGPT to evaluate cancer myths and misconceptions: Artificial intelligence and cancer information. JNCI Cancer Spectr. 2023;7(2):pkad015. doi:10.1093/jncics/pkad015